UP AHEAD: Six Years of Low Expectations for Students with Disabilities

Over the past few months, states have been busy formulating new annual targets for their state performance plans (SPP) for FFY 2020-2025. The new six-year targets were to be submitted to the Office of Special Education Programs (OSEP) at the U.S. Dept. of Education along with states’ SPP Annual Performance Report (APR) on February 1, 2022.

These new targets were to be developed with stakeholder involvement, as this OSEP memo points out. SPP targets are used to annually review states’ performance in implementing the Individuals with Disabilities Education Act (IDEA). In turn, states use the targets to evaluate how local school districts implement IDEA. The SPP/APR submissions, including the new targets for FFY 2020-2025, are currently under review at OSEP.

Here’s the problem …

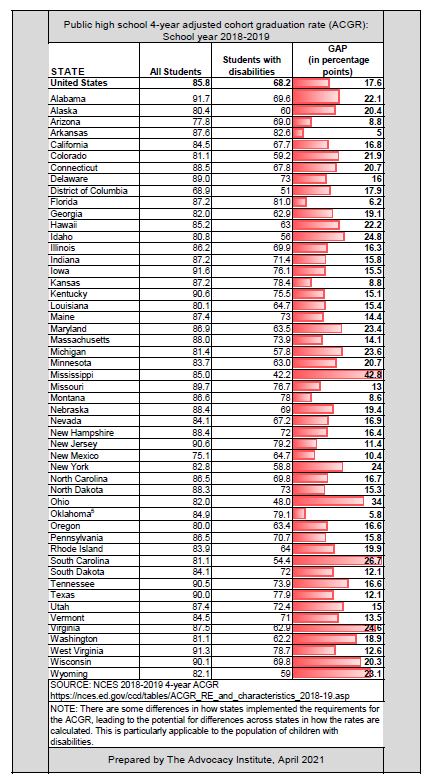

Based on information shared with stakeholders in several states (see AR, CO, FL, KY, MD, SD), the data being used to set six years of expectations for the participation and performance of students with disabilities on state assessments (known as SPP Indicator 3) come from the state assessments conducted in the 2020-2021 school year.

This is a BIG problem, since the participation and performance of students with disabilities in 2020-2021 were heavily affected by continued school closures, remote instruction, and high absenteeism, as well as by the failure to implement students’ Individualized Education Programs (IEPs) and a shortage of qualified special education and related services personnel.

So … using data from 2020-2021 to set targets on participation and performance for the next 6 years ensures low expectations. Essentially, the learning loss of students with disabilities will be baked into performance targets for 6 years!

As the U.S. Dept. of Education’s Office for Civil Rights reported in Education in a Pandemic: The Disparate Impacts of COVID-19 on America’s Students, “[f]or many elementary and secondary school students with disabilities, COVID-19 has significantly disrupted the education and related aids and services needed to support their academic progress and prevent regression. And there are signs that those disruptions may be exacerbating longstanding disability-based disparities in academic achievement.”

Now, setting 6 years of annual targets for performance on state assessments in math and reading based on 2020-2021 results will exacerbate the disparate impact of COVID-19.

According to this article from the Region 15 Comprehensive Center (funded by the U.S. Dept. of Ed):

“While 2021 assessment data can still be a helpful barometer of how well educators and schools supported students’ grade-level learning, it is not appropriate to use these data alone to make inferences about student success or school quality, particularly if such inferences are attached to significant decisions or consequences. To avoid drawing incorrect conclusions from assessment data about student success or school quality, policymakers and education leaders should consider lowering or removing any high stakes attached to 2021 assessment results.”

This Education Week article on the results of 2021 testing points out that “even though educators are hungry for insight, assessment experts are urging caution. This year, more than any in recent memory, calls for extreme care and restraint when analyzing statewide test scores, drawing conclusions, and taking action, they say.”

And, as this NCIEA article points out, efforts should be made to “minimize the long-term influence of ‘fragile indicators’ such as proficiency rates when forced to use the imperfect assessment data from 2020-2021.”

Allowing states to set SPP targets using 2020-2021 state assessment data is sure to maximize the impact of COVID-19 on students with disabilities for years to come. Buckle up.